Do It in Stride

On-the-Go Walking Gesture for Interaction with Portable Mixed-Reality HMD

WORK IN PREPARATION | 18 DEC 2023

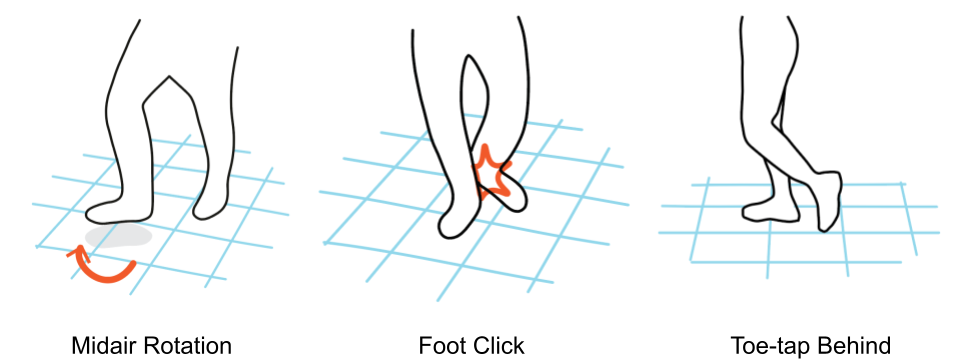

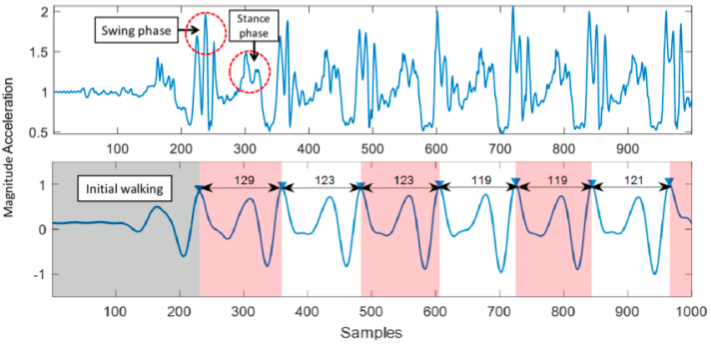

Advancements in head-mounted displays (HMDs) are making mixed reality (MR) increasingly wireless and portable. As a result, interactions with MR HMDs are becoming more dynamic and often occur on the go. However, traditional mobile interaction techniques have drawbacks: hand interactions can be cumbersome while walking, voice commands may face noise and privacy issues, and eye gestures can distract from the environment. To address these drawbacks, our project explores using the foot movements of walking itself as a novel input modality. For example, walking distance can control continuous inputs like a volume slider, and a larger step can act as a discrete input for selections. We evaluated dozens of walking-based gestures, assessing their ease, social acceptability, and impact on walking pattern and speed. This research has led us to establish design principles for foot gestures tailored to walking, along with potential applications in MR environments.

(This is an ongoing project I lead, working with Prof. Daniel Vogel at the University of Waterloo.)